The Genesis of Flonto

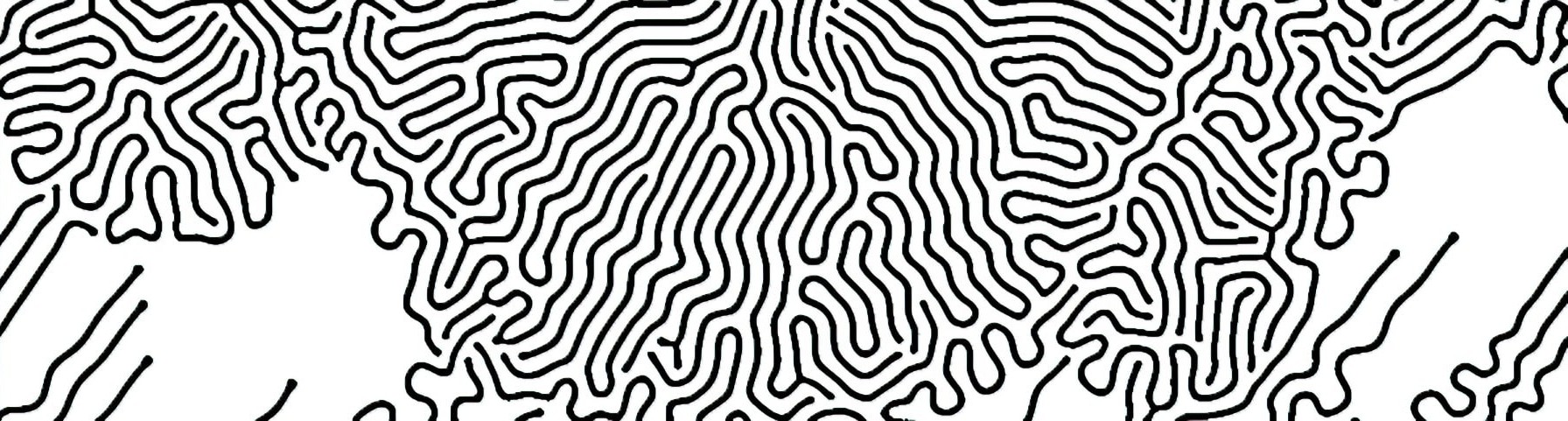

Flonto started as a mobile game prototype during my freshman year. Simple idea: fluid-like enemy using reaction-diffusion systems, shoot stuff, try not to die. Classic.

The enemy’s behavior was driven by a Gray-Scott reaction-diffusion system, inspired by Karl Sims’ tutorial (https://karlsims.com/rd.html): two chemicals, A and B, spread across a grid while B feeds on A.
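
For reference, the model described on that page is usually written as the two Gray-Scott equations below. This is the textbook form rather than anything lifted from Flonto’s code, and mapping the symbols onto the names used in this post is my assumption:

```latex
\begin{aligned}
\frac{\partial A}{\partial t} &= D_A \nabla^2 A - A B^2 + f\,(1 - A) \\
\frac{\partial B}{\partial t} &= D_B \nabla^2 B + A B^2 - (k + f)\,B
\end{aligned}
```

Here $D_A$ and $D_B$ are the diffusion rates (`dA`/`dB` in the job code, `_du`/`_dv` in the shader), $f$ is the feed rate and $k$ is the kill rate. Both code versions in this post take an explicit Euler step with a time step of 1, adding the right-hand side straight onto the current value.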

Initially, Flonto was built on:

- Unity engine

- Burst compiler

- Unity Jobs system

Here’s a glimpse of the old implementation:

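    using Unity.Burst;
    using Unity.Collections;
    using Unity.Jobs;

    [BurstCompile]
    public struct ParallelJob : IJobParallelFor
    {
        public NativeArray<float> A;
        public NativeArray<float> B;
        [ReadOnly] public NativeArray<float> Alaplacian; // precomputed elsewhere
        [ReadOnly] public NativeArray<float> Blaplacian; // precomputed elsewhere
        public float dA; // diffusion rate of A
        public float dB; // diffusion rate of B
        public float f;  // feed rate
        public float k;  // kill rate

        public void Execute(int index)
        {
            // Cache both values first so B's update uses the pre-step A,
            // not the value that was just written.
            float a = A[index];
            float b = B[index];
            float reaction = a * b * b;

            A[index] = a + (dA * Alaplacian[index] - reaction + f * (1 - a));
            B[index] = b + (dB * Blaplacian[index] + reaction - (k + f) * b);
        }
    }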
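
The job takes both Laplacians as ready-made inputs, and the post doesn’t show how they were computed. Here is a minimal sketch of one common way to do it, assuming a row-major grid, wraparound edges, and the 3x3 weights from Karl Sims’ tutorial (center -1, edge neighbors 0.2, diagonals 0.05). `GridLaplacian` and its parameters are hypothetical names, not from the original project:

```csharp
using System;

public static class GridLaplacian
{
    // The 8 neighbor offsets and their weights (assumed 3x3 kernel:
    // 0.2 for edge-adjacent cells, 0.05 for diagonals).
    static readonly int[] Dx = { -1, 0, 1, -1, 1, -1, 0, 1 };
    static readonly int[] Dy = { -1, -1, -1, 0, 0, 1, 1, 1 };
    static readonly float[] W = { 0.05f, 0.2f, 0.05f, 0.2f, 0.2f, 0.05f, 0.2f, 0.05f };

    // Returns the weighted Laplacian of a row-major width*height grid.
    public static float[] Compute(float[] grid, int width, int height)
    {
        var result = new float[grid.Length];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                float sum = -grid[y * width + x]; // center weight is -1
                for (int j = 0; j < 8; j++)
                {
                    int nx = (x + Dx[j] + width) % width;   // wrap horizontally
                    int ny = (y + Dy[j] + height) % height; // wrap vertically
                    sum += W[j] * grid[ny * width + nx];
                }
                result[y * width + x] = sum;
            }
        }
        return result;
    }
}
```

The neighbor weights add up to 1 and the center weight is -1, so a perfectly flat grid gives a Laplacian of zero everywhere, which is a handy sanity check. To feed a Burst job, the results would still have to be copied into its `NativeArray<float>` fields.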

This approach had its merits:

- Learned multithreading basics

- Decent performance even on mobile

But it also had significant drawbacks:

- CPU-bound, limiting scalability/performance

- Complex C# code for parallel processing

Fast forward to now. I’m eyeing a rewrite as a GPU shader in Unity (Cg/HLSL inside ShaderLab, not literally GLSL). Why?

- Better performance: the GPU updates every cell in parallel

- I actually know what I’m doing now (debatable)

- Shaders are cool

Gonna dig up the old code, laugh a bit, cry a bit, then rewrite the whole thing. Goals:

- Port the reaction-diffusion to a fragment shader

- Make it run on more than just phones

- Maybe add some new features (or not, we’ll see)

Here’s the core of the shader so far:

    Shader "reaction-diffusion/StepShader"
    {
        Properties
        {
            _MainTex ("Texture", 2D) = "white" {}
            _f("f", Range (0.01, 0.09)) = 0.0
            _k("k", Range (0.03, 0.07)) = 0.04
            _du("du", float) = 0.2
            _dv("dv", float) = 0.1
        }

        SubShader
        {
            Pass
            {
                CGPROGRAM
                #pragma vertex vert
                #pragma fragment frag

                // ... (vertex shader and other setup)

                float4 frag (v2f i) : SV_Target
                {
                    // Laplacian calculation
                    float2 laplacian = float2(0.0, 0.0);
                    for(int j=0; j<8; ++j) {
                        // ... laplacian calculation
                    }

                    // Reaction-diffusion step (x holds chemical A, y holds chemical B)
                    float2 val = tex2D(_MainTex, i.uv).xy;
                    float2 delta = float2(
                        _du * laplacian.x - val.x*val.y*val.y + _f * (1.0-val.x),
                        _dv * laplacian.y + val.x*val.y*val.y - (_k + _f) * val.y);

                    return float4(val + delta, 0, 0);
                }
                ENDCG
            }
        }
    }

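To make this work in Unity, we use a technique called ping-pong rendering. A shader can’t safely read from the texture it’s writing to, so each step reads the current state from one render texture and writes the next state into the other, and then the two swap roles.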

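    RenderTexture texA; // Ping texture
    RenderTexture texB; // Pong texture

    void Start() {
        // Created here because field initializers can't use width/height
        texA = new RenderTexture(width, height, 0);
        texB = new RenderTexture(width, height, 0);
    }

    void Update() {
        // Read the current state from texA, write the next state into texB
        Graphics.Blit(texA, texB, reactionDiffusionMaterial);

        // Flip 'em
        SwapTextures(ref texA, ref texB);
    }

    // Assumed helper (not shown in the original snippet)
    static void SwapTextures(ref RenderTexture a, ref RenderTexture b) {
        (a, b) = (b, a);
    }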
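
The same double-buffering idea can be sketched without Unity, using two plain arrays. This is an illustration, not the project’s code: `PingPong`, `Step`, and the rule delegate are hypothetical names, picked only to show why each step reads from one buffer and writes to the other.

```csharp
using System;

public class PingPong
{
    float[] read;   // current state ("ping")
    float[] write;  // next state ("pong")

    public PingPong(float[] initial)
    {
        read = (float[])initial.Clone();
        write = new float[initial.Length];
    }

    // The most recently completed state.
    public float[] Current => read;

    // Runs one step: every output cell is computed from the untouched
    // previous state, then the two buffers swap roles.
    public void Step(Func<float[], int, float> rule)
    {
        for (int i = 0; i < read.Length; i++)
        {
            write[i] = rule(read, i);
        }
        (read, write) = (write, read);
    }
}
```

For example, starting from `{ 0, 1, 0, 0 }` and calling `Step((s, i) => 0.5f * (s[i] + s[(i + 1) % s.Length]))` averages each cell with its right-hand neighbor and leaves `Current` at `{ 0.5, 0.5, 0, 0 }`. Doing the same update in place would give the last cell 0.25 instead of 0, because it would read the already-updated first cell. That is the kind of contamination the two textures avoid.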

Why not use compute shaders? WebGL hates compute shaders (as in, it doesn’t support them at all). But who’s making web games in 2023 anyway? (Oh wait, we are.) At least there are no more C# Jobs headaches.